Investigating the Interaction of Novices and Non-Novices with the Alloy Formal Specification Language

Project Objective

To understand how novices and non-novices perform in bug fixing and model building tasks in Alloy.

Study Type:

Exploratory

Research Instrument:

Tasks and Questionnaires

Date:

2019 – 2022

Methods Used

- Task-based performance measures

- Examination of logs for detailed user actions

- Cognitive measures

- Post-task questionnaires

Rationale: These methods were chosen to obtain a combination of objective performance metrics, granular user interaction data, and subjective feedback on Alloy tasks.

Role

Principal Researcher

I framed the research questions, chose the methodologies, analyzed the data, and presented the findings.

Key Findings

- Novice vs Non-Novice Performance: Non-novices outperformed novices by 54% on average, measured by correctness of tasks. On average, non-novices also completed the tasks 32 minutes earlier than novices.

- Difficulty Building Models: Both user groups found building an Alloy model from scratch to be challenging. Writing

predicates to create dynamic properties in Alloy was the most difficult task for both groups. Non-novices were more successful in writing static properties and writing assertions. - Syntactic vs Semantic: Participants from both groups exhibited better performance on syntactic tasks than on semantic tasks.

- Cognitive Measures and Alloy Comprehension: Spatial cognition and Alloy bug fixing are correlated, indicating importance of the cognitive skill in understanding Alloy and its mathematical concepts.

Process

- Preparation: Created a detailed research plan outlining the tasks and metrics of interest.

- Recruitment: Recruited 30 participants with varying levels of familiarity with Alloy.

- Execution: Participants were given specific tasks to solve in Alloy, and their interactions with the analyzer were logged. They also worked on two cognitive tasks, mental rotation and operation span tasks, to measure spatial cognition and working memory, respectively.

- Analysis: Used a combination of quantitative metrics and qualitative observations from the logs to identify patterns. Used Mann-Whitney U test to compare different metrics in the two groups and ran Spearman’s correlation to observe the relationship between cognitive tasks and Alloy tasks. I used the JASP software to run the statistical tests.

- Reporting: Presented findings through a comprehensive research paper in Formalise 2023, breaking down the results by research questions. The paper won the best paper award.

Recommendations

- Teaching systematic methods for debugging Alloy models and fixing semantic bugs, such as identifying an over-constraint that results in unsatisfiability of a model or a missing constraint that causes an assertion failure.

- Automated debugger extension to Alloy analyzer.

- Introduced usability improvements for Alloy Analyzer visualizations, focusing on clearer instance differentiation and better highlighting for discrepancies.

- The significant positive correlation between the bug fixing task accuracy and mental rotation score suggests that people with such skills might be better suited to understand and work on Alloy models.

Visuals

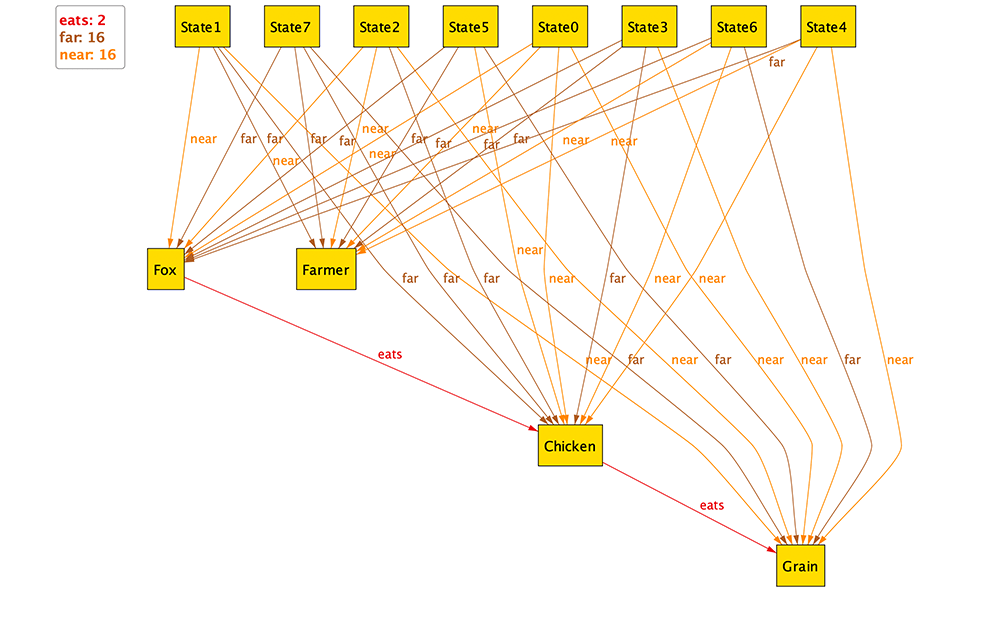

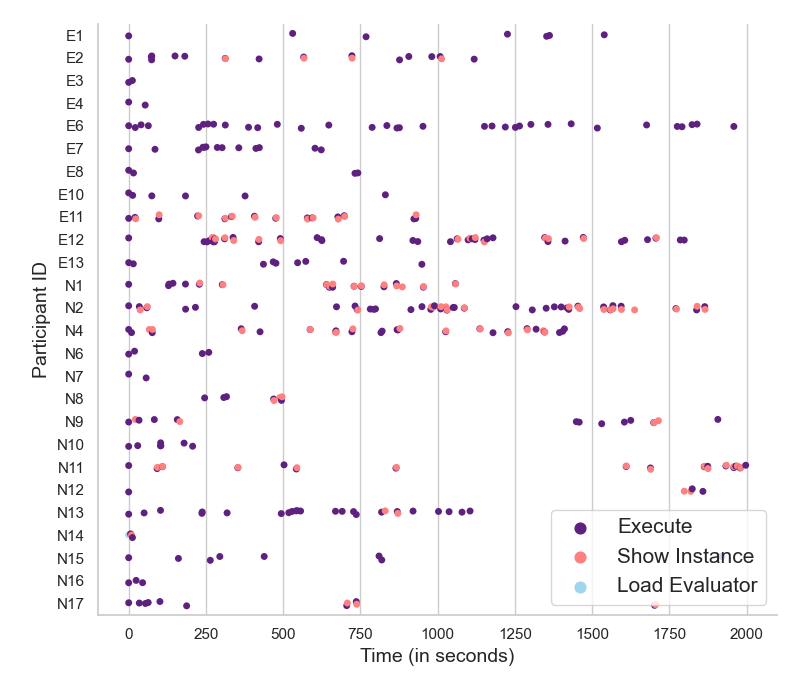

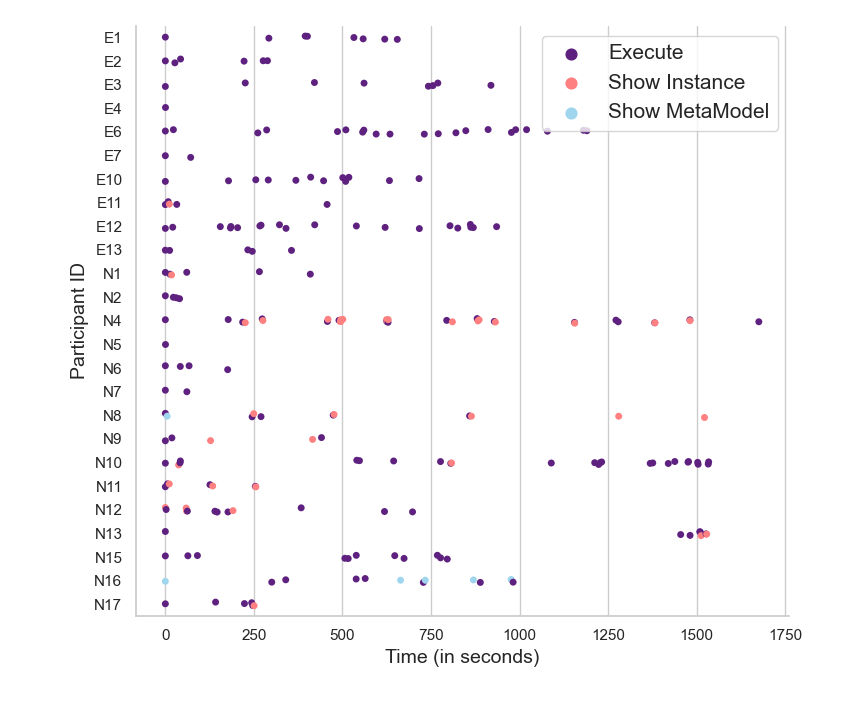

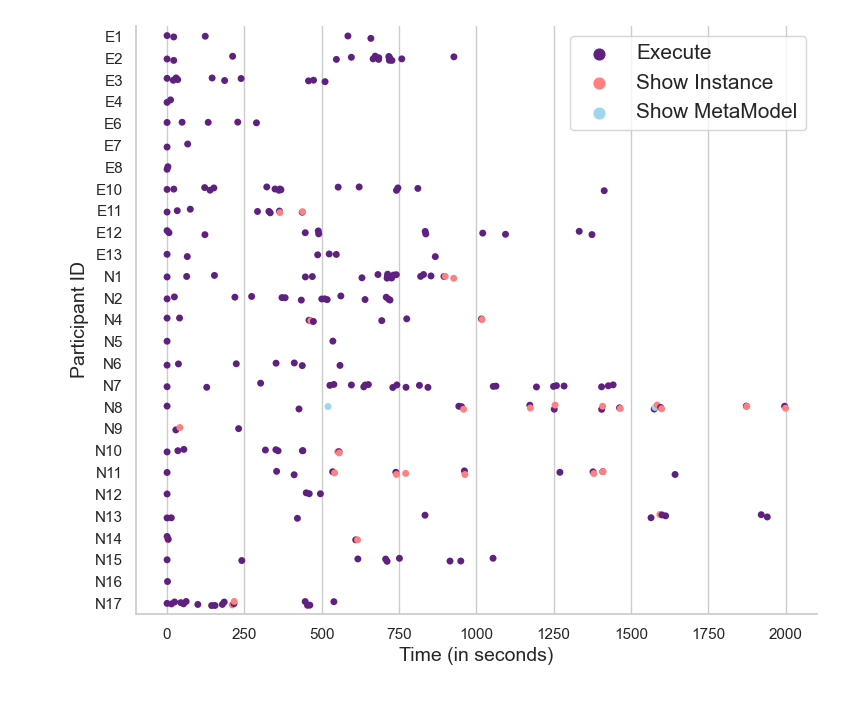

The following plots show the patterns of execution and model finding extracted from the participants’ interaction logs. The plots visualize the consecutive nature of executions while working on bug finding tasks, and the differences between the usage patterns of novice and non-novices. In the Farmer and Grade tasks, novices executed the models more often than non-novices.

Reflection

The study shed light on the distinctive challenges both novices and experienced users face with Alloy. While the results were enlightening, a more extensive participant base might have provided a richer data set. For future studies, real-time monitoring of participants could offer deeper insights into their problem-solving strategies.