Sentiment Analysis in Software Engineering: A Comparative Study of Stack Overflow Questions

Project Objective

To examine how developers perceive sentiment in Stack Overflow questions, and how closely the output of different sentiment analysis tools, including those designed specifically for software engineering, matches those perceptions.

Study Type:

Comparative and Qualitative Study

Research Instrument:

Questionnaire

Date:

2020 – 2021

Methods Used

- Sentiment analysis on question titles and text from Stack Overflow (SO).

- Calculation of average ratings for each sentence to analyze the combination of title text and question body.

- Comparison of results from various sentiment analysis tools with developer ratings.

- Qualitative analysis of the reasoning participants provided for their ratings.
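The comparison step above can be sketched in a few lines of Python. This is a minimal illustration, not the study's actual analysis code: the function names, the 0.5 threshold for turning an average rating into a -1/0/1 label, the tool names, and all data values are assumptions made up for the example.

```python
from statistics import mean


def average_polarity(ratings, threshold=0.5):
    """Collapse individual ratings (-1, 0, 1) into a single polarity label.

    The threshold is an assumption for this sketch, not a value from the study.
    """
    avg = mean(ratings)
    if avg >= threshold:
        return 1
    if avg <= -threshold:
        return -1
    return 0


def agreement_rate(developer_labels, tool_labels):
    """Fraction of questions where the tool label equals the developer label."""
    if not developer_labels or len(developer_labels) != len(tool_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(d == t for d, t in zip(developer_labels, tool_labels))
    return matches / len(developer_labels)


# Hypothetical ratings: one inner list per question, one entry per developer.
developer_ratings = [
    [1, 1, 0, 1],
    [0, -1, -1, -1],
    [0, 0, 1, 0],
]
developer_labels = [average_polarity(r) for r in developer_ratings]

# Hypothetical tool outputs on the same three questions.
tool_labels = {
    "tool_a": [1, -1, 0],
    "tool_b": [0, -1, 1],
}
agreement = {
    name: agreement_rate(developer_labels, labels)
    for name, labels in tool_labels.items()
}
print(agreement)
```

Running the same comparison once on title-only labels and once on title-plus-body labels would give the two sets of agreement figures that the study contrasts.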

Role

Researcher

I analyzed the data and presented the findings in a research paper and a conference presentation.

Key Findings

- Tools and User Comparison: Sentiment analysis of the combined SO question title and body text aligned more closely with average user ratings than analysis of the title text alone.

- Tool Comparison: Tools specifically designed for software engineering texts (like SentiStrength-SE, SentiCR, and Senti4SD) performed better than general-purpose tools.

- Sentiment Interpretation Factors: Developers considered factors like excitement, willingness to learn, humility, directness, and casual language when interpreting question sentiment.

Process

- Preparation: Our team selected and extracted question titles and text from Stack Overflow.

- Recruitment: Our team recruited 10 developers for the study on Stack Overflow.

- Execution: I applied various sentiment analysis tools to the data, including general-purpose tools and those tailored for software engineering texts.

- Analysis: I drew comparisons between tool outputs and developer ratings and qualitatively analyzed the developer reasoning behind their ratings.

- Reporting: I presented the findings at the SEMotion workshop and in a paper published in the workshop proceedings.

Recommendations

- Sentiment analysis tools tailored for software engineering texts should be used for more accurate sentiment detection in software engineering contexts. The study may inform the choice of sentiment analysis tools in future software engineering research.

Visuals

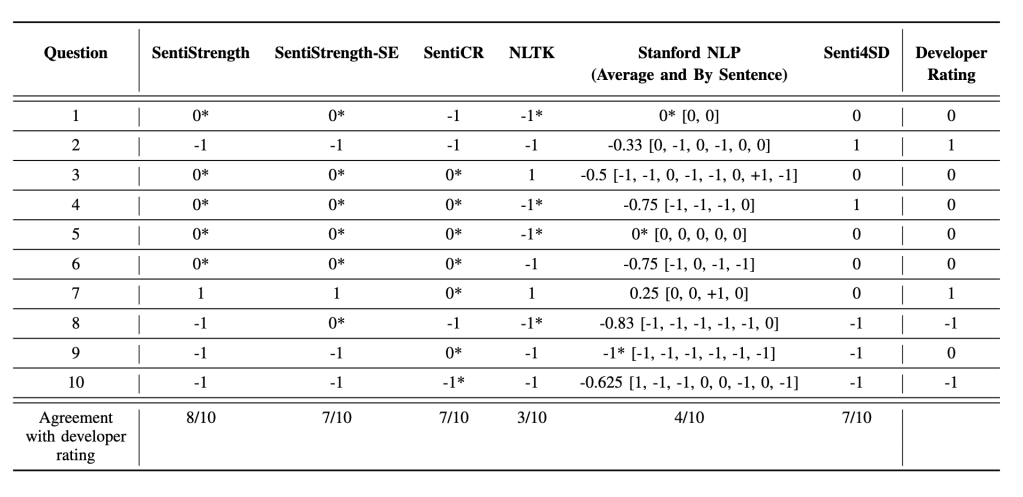

The table compares the average developer sentiment rating for the title text and question body of SO questions with the output of the sentiment analysis tools. “-1” indicates negative sentiment, “0” indicates neutral sentiment, and “1” indicates positive sentiment.

An asterisk (*) indicates that the sentiment for the combined question title text and body is the same as for the title text alone.

The three software engineering sentiment analysis tools align more closely with the developer ratings than the general-purpose tools.

Reflection

The paper concludes that software-engineering-specific sentiment analysis tools align more closely with developer sentiments than general-purpose tools, which suggests that domain specificity matters. Future work will examine the observed discrepancies in more depth.